The Cross Entropy Method

Below is a quick run on the cross entropy method used in reinforcement learning (RL).

What is an RL episode?

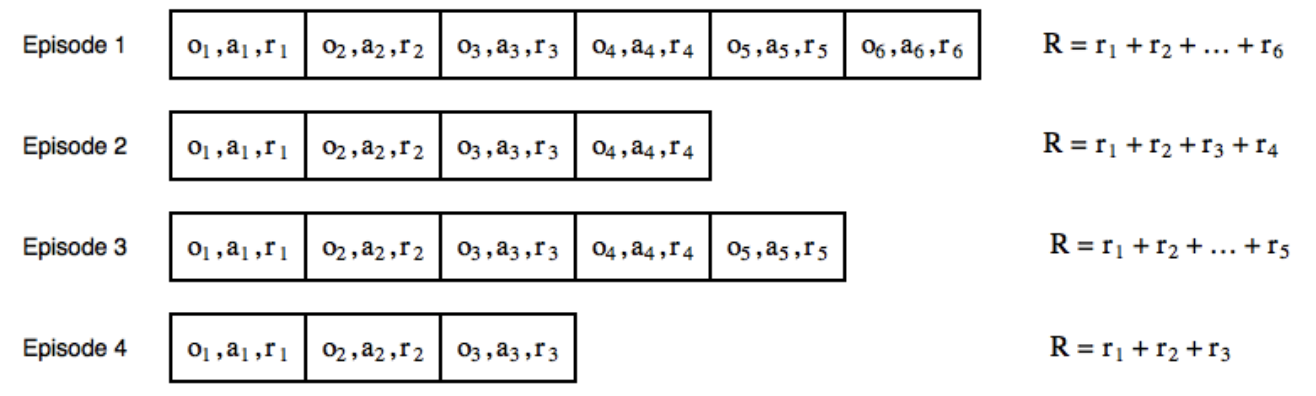

A finite horizon episode in RL is made of a sequence of observations that the agent received from the environment, actions it has issued, and the rewards for these actions until the goal is reached. A list of episodes, each with their own sequences of observations, actions and rewards are shown in the figure1below.

Sample episodes with their observations, actions, and rewards

Cross Entropy Method

Below are the steps for the cross entropy method:

- Let’s say we have an RL agent that interacts with the environment to generate \(N\) episodes. For each of these \(N\) episodes, we would have \(N\) scalar values, where each value represents a cumulative reward or

returnfor that episode. - The

cross entropymethod then simply uses the top \(X\) out of the \(N\) number of episodes to train the RL agent. The value of \(X\) could be defined based on some threshold value of rewards. Usually, we use some percentile of all rewards, such as 50th or 70th. - Once the RL agent is trained on these \(X\) best episodes, it interacts with the environment again to generate more episodes (Step 1). Thus, the entire training process is repeated to further refine the RL agent until we are satisfied with it’s performance.

This is the core idea behind the cross entropy method that generally works well for basic environments and serves as a good baseline.

1: Lapan, Maxim. Deep reinforcement learning hands-on. Packt publishing, 2020.